Pluggable Observability for Semantic Workflows: cognee × Keywords AI

We love building graph-native, data-aware AI systems. But when you stitch together multiple async tasks, agents, and data pipelines, your system can become a black box, with behavior that’s hard to trace, measure, and debug.

You need span‑level visibility across workflows—without littering your code with tracing boilerplate or getting locked into a tool.

That's why we designed cognee (memory for AI agents) to run on modular, scalable pipelines. Our workflows load data, create semantic graph nodes, and trigger downstream tasks. We wanted clean, consistent tracing across all of it, and Keywords AI, via its lightweight keywordsai-tracing SDK, gives us exactly that with a single decorator: @observe.

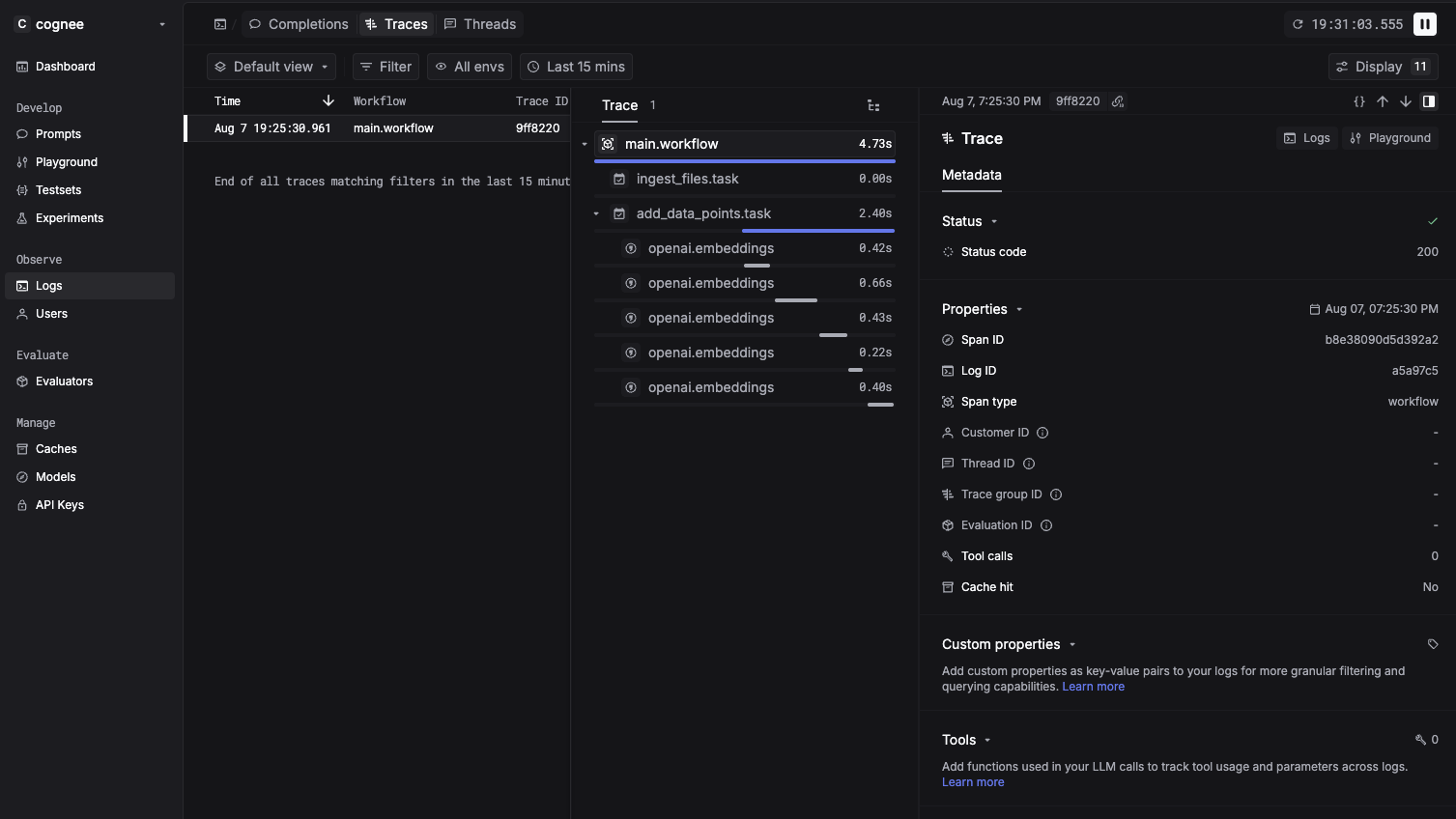

Keywords AI, an LLM observability platform for AI teams, provides real-time traces, logs, and metrics across LLM calls and agent runs: task and workflow spans, token and latency stats, and error visibility. Wrapping our async pipelines with @observe gives every span a meaningful name and a stable ID, so when something veers off course we can debug quickly, with zero changes to our business logic.

In this article, we'll cover how cognee standardizes observability, explain how the Keywords AI adapter works, and walk through a practical example you can run locally.

Who This Is For:

- ML/agent teams who need to see every tool call and model hop.

- Platform/SRE folks who want consistent tracing across services.

- Developers who want observability without vendor lock‑in or heavy integration work.

Outcome: Name every span. Follow every hop. Fix issues faster.

One Decorator, Many Backends: cognee’s Pluggable Observability

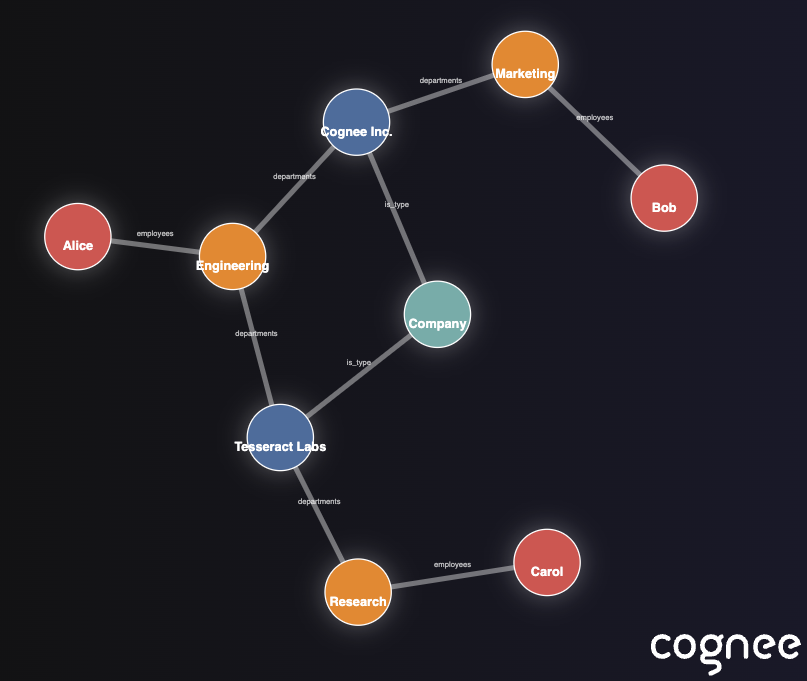

cognee is an open source memory engine with a semantic graph at its core. You use tasks and workflows via its Python SDK to manipulate DataPoint models based on your data and to connect them into a knowledge graph. For observability, cognee exposes a single surface: @observe.

- Unified abstraction: Decorate tasks with @observe; decorate workflows with @observe(workflow=True).

- Pluggable backends: Choose your monitoring tool via config or environment variables.

- Zero vendor lock-in: The interface remains the same regardless of the telemetry provider.
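To make the idea concrete, here is a minimal sketch of one `observe` surface sitting in front of swappable backends. It is not cognee's actual implementation: the registry, `register_backend`, the `MONITORING_TOOL` variable name, and both toy backends are hypothetical stand-ins that only illustrate the pattern.

```python
import functools
import os

# Hypothetical registry: backend name -> factory that returns a decorator.
_BACKENDS = {}

def register_backend(name):
    def wrap(factory):
        _BACKENDS[name] = factory
        return factory
    return wrap

@register_backend("none")
def _noop_backend():
    # No telemetry: hand the function back unchanged.
    return lambda func: func

RECORDED = []  # span names captured by the toy "record" backend

@register_backend("record")
def _record_backend():
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            RECORDED.append(func.__name__)  # stand-in for emitting a span
            return func(*args, **kwargs)
        return wrapper
    return decorator

def get_observe(environ=os.environ):
    """Return the decorator for the backend named in config (assumed env var)."""
    backend = environ.get("MONITORING_TOOL", "none")
    return _BACKENDS[backend]()

# Task code only ever sees `observe`; the backend is chosen by configuration.
observe = get_observe({"MONITORING_TOOL": "record"})

@observe
def load_data(path):
    return f"loaded {path}"
```

Swapping the backend in this sketch means changing one configuration value; the decorated task code stays the same.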

Integrating Keywords AI: Spanning with a Single Import

cognee-community is our extension hub. Adapters for third‑party tools and databases, pipelines, and community‑contributed tasks live here. Hosting integrations in this repo gives you:

- Community license and independent evolution: Adapters can iterate at their own pace, independently of core releases.

- Slim, targeted installs: Pull in only what you need, avoiding heavyweight drivers for users who don’t need them.

- Seamless interoperability with core: A small registration shim wires the package into cognee’s abstraction layer.

- Predictable layout: Under packages/*, each subfolder is a self‑contained provider implemented in the same pattern you’ll follow.

Keywords AI integration lives in the community repo as cognee-community-observability-keywordsai.

Note on the approach: The adapter intentionally monkey‑patches get_observe() at import time. This keeps the @observe surface stable while letting you swap backends via config.

Quick Start (3 Minutes)

Prerequisites:

- Python 3.10+

- Keywords AI API key

- LLM API key (e.g., OpenAI)

- A clean virtual environment
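If you want to verify the prerequisites before starting, a small preflight check along these lines can help. The environment variable names `KEYWORDSAI_API_KEY` and `LLM_API_KEY` are assumptions here; confirm the exact names in the adapter's README.

```python
import sys

# Assumed variable names; check the adapter's README for the real ones.
REQUIRED_ENV = ("KEYWORDSAI_API_KEY", "LLM_API_KEY")
MIN_PYTHON = (3, 10)

def check_prerequisites(environ, version=sys.version_info):
    """Return a list of human-readable problems; an empty list means ready."""
    problems = []
    if tuple(version[:2]) < MIN_PYTHON:
        problems.append("Python 3.10+ is required")
    for name in REQUIRED_ENV:
        if not environ.get(name):
            problems.append(f"{name} is not set")
    return problems
```

Calling `check_prerequisites(os.environ)` (after `import os`) at the top of a script surfaces missing keys before any pipeline work starts.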

- Clone the community repo:

- Get your Keywords AI API key from the Keywords AI platform and set the following environment variables:

- Navigate to the Keywords AI integration directory and install dependencies:

Prefer pip? Use your environment's installer to install this package and its extras.

- The adapter patches cognee’s get_observe() at import time, so you get Keywords AI spans with no code changes to your tasks:

Under the hood, get_keywordsai_observe() maps the cognee @observe surface to Keywords AI’s @task and @workflow decorators:

- Automatic telemetry bootstrap: KeywordsAITelemetry() initializes the SDK once.

- Named spans: Use @observe(name="my_span") to override defaults.

- Tip: Use @observe(name="ingest_files") to enforce consistent span naming.
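The mapping described above can be sketched as follows. This is an illustration under stated assumptions, not the adapter's source: `sdk_task` and `sdk_workflow` are local stand-ins for the Keywords AI @task and @workflow decorators, and the real adapter also initializes KeywordsAITelemetry(), which is omitted here.

```python
import functools

SPANS = []  # (kind, name) pairs recorded by the stand-in decorators

def _span_decorator(kind):
    """Build a stand-in for a tracing SDK's task/workflow decorator."""
    def factory(name=None):
        def decorator(func):
            span_name = name or func.__name__  # default to the function name
            @functools.wraps(func)
            def wrapper(*args, **kwargs):
                SPANS.append((kind, span_name))
                return func(*args, **kwargs)
            return wrapper
        return decorator
    return factory

sdk_task = _span_decorator("task")          # stand-in, not the SDK's decorator
sdk_workflow = _span_decorator("workflow")  # stand-in, not the SDK's decorator

def observe(func=None, *, name=None, workflow=False):
    """Support both bare @observe and @observe(name=..., workflow=True)."""
    decorator = (sdk_workflow if workflow else sdk_task)(name=name)
    # Bare usage passes the function directly; otherwise return the decorator.
    return decorator if func is None else decorator(func)

@observe
def parse():
    return 1

@observe(name="ingest_files")
def ingest():
    return 2

@observe(workflow=True)
def run():
    return parse() + ingest()
```

In this sketch, `name=` overrides the default span name, and `workflow=True` selects the workflow decorator instead of the task one.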

Example: Trace Every Step in a cognee Pipeline

To demonstrate how Keywords AI helps trace every step in cognee, we’ve created a simple, custom pipeline that shows how to build DataPoint nodes from JSON input files (like people.json or companies.json), ingest them, and render a graph—all while emitting task and workflow spans to Keywords AI.

Key points:

- Decorate tasks: @observe creates a Keywords AI task span for ingest_files.

- Decorate workflows: @observe(workflow=True) wraps the whole run as a workflow span.

- Wrap existing functions: observe(_add_data_points) instruments an existing cognee task without changing its code.
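The three key points can be sketched in a few lines of async Python. Everything here is a simplified stand-in: `observe` records span names locally instead of sending them to Keywords AI, `_add_data_points` is a toy replacement for the cognee task of the same name, and the dictionary nodes are a hypothetical shape standing in for DataPoint models.

```python
import asyncio
import functools

TRACE = []  # span names in the order they start

def observe(func=None, *, name=None, workflow=False):
    """Stand-in for cognee's observe: record a span name, then await the call."""
    def decorator(fn):
        span = ("workflow:" if workflow else "task:") + (name or fn.__name__)
        @functools.wraps(fn)
        async def wrapper(*args, **kwargs):
            TRACE.append(span)
            return await fn(*args, **kwargs)
        return wrapper
    return decorator if func is None else decorator(func)

async def _add_data_points(points):
    # Pretend this is an existing library task we cannot edit.
    return len(points)

# Wrap the existing function instead of changing its code.
add_data_points = observe(_add_data_points)

@observe
async def ingest_files(records):
    # Hypothetical node shape; a real pipeline would build DataPoint models.
    return [{"name": record["name"]} for record in records]

@observe(workflow=True)
async def run_pipeline(records):
    points = await ingest_files(records)
    return await add_data_points(points)

stored = asyncio.run(run_pipeline([{"name": "Ada"}, {"name": "Grace"}]))
```

The workflow span starts first and the two task spans follow inside it, which is the nesting you would expect to see in a trace view.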

What You Get in Keywords AI

- Workflow and task spans with consistent naming.

- Timing, errors, and context tied back to your semantic pipeline.

- No custom plumbing—instrumentation is a single decorator.

Step Into the Future: Measurable, Graph-Native AI Systems

Observability should help you move faster, not slow you down. With cognee’s unified @observe surface and Keywords AI’s lightweight tracing, you get robust telemetry for complex, graph-driven pipelines—without holding development back.

Have questions or want to join the conversation? Drop into our Discord communities.
