OpenAI’s GPT‑5 has arrived – What does it mean for AI memory?

OpenAI’s GPT‑5 is here, and it’s already shaking things up, especially for AI memory and context. Here’s what’s actually confirmed so far and why it matters for anyone building memory‑heavy workflows.

Before we dive in, let's set the scene with a recent tease from OpenAI's CEO, Sam Altman, that's gotten everyone hyped.

Sam Altman’s Big Tease: “Ton of Stuff to Launch…”

The GPT-5 hype train left the station on August 2, 2025, when Sam Altman posted an intriguing update on X (formerly Twitter). His message couldn’t have been clearer: something big was coming.

In other words, OpenAI was gearing up for multiple launches, and the widely expected star of the show was GPT‑5. Altman’s note about “hiccups and capacity crunches” hinted that the rollout might strain systems or cause minor turbulence for users (which it did). The key takeaway: GPT‑5 is here, just not as the instant August 1 drop some had predicted.

So what have we learned now that GPT-5 is out? Let’s start with what we know.

GPT‑5 Features

OpenAI hasn’t spilled all the secrets, but through official channels and Altman’s own comments we have a decent picture of GPT‑5’s confirmed features. Here’s what stands out:

Inability to Plot Graphs

OpenAI presented GPT-5’s eval results with inaccurate graphs: in one launch chart, a 52.8% bar was drawn taller than a 69.1% bar. My suspicion is that the errors were deliberate, meant to make OpenAI go viral.

This morning on my way to work, as I passed a homeless tent city in Kreuzberg, even the German radio reported that GPT-5 had launched.

Even bad publicity is publicity.

This is likely just a marketing stunt.

Room for Memory

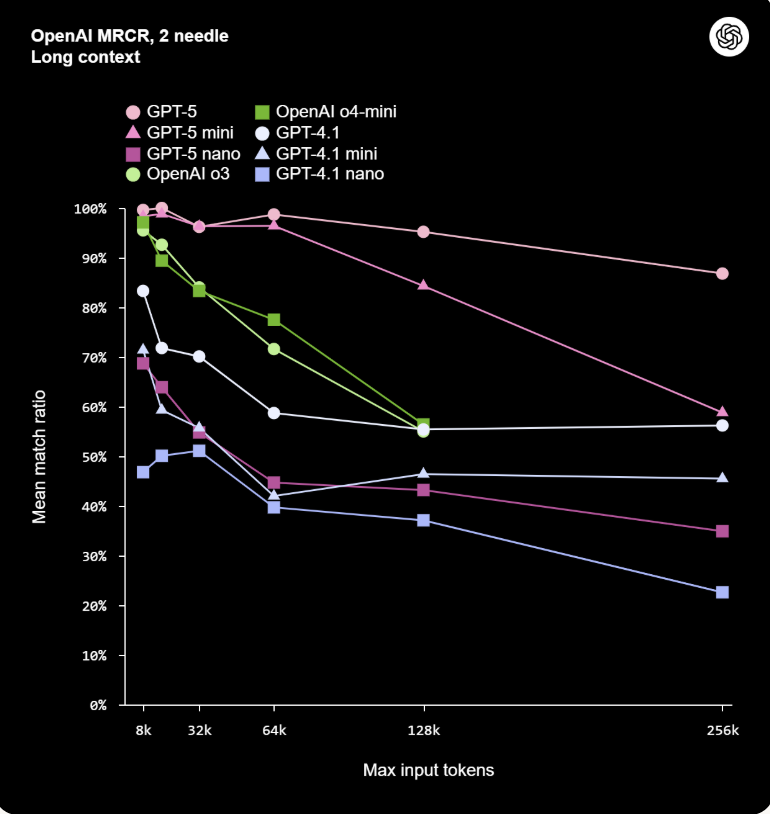

GPT‑5 is set to work better with long context windows. Still, its 400,000-token window holds only around 533 PDF pages (roughly 750 tokens per page), while the average data warehouse is 250 TB.

1 TB = 1024 GB. Therefore, 250 TB = 256,000 GB.

Assuming an average PDF page size of 250 KB (0.25 MB), we can calculate:

1 GB = 1024 MB, so 1024 MB / 0.25 MB per page = 4,096 pages per GB

For 250 TB: 256,000 GB * 4,096 pages/GB = 1,048,576,000 pages
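The back-of-the-envelope estimate above can be reproduced in a few lines of Python. This sketch uses only the article’s own assumptions (binary units, 0.25 MB per PDF page, about 533 pages per 400,000-token window) and then divides the page count by the number of pages that fit into one window:

```python
# Assumptions taken from the text: 1 TB = 1024 GB, 1 GB = 1024 MB,
# 0.25 MB per PDF page, and ~533 PDF pages per 400,000-token context window.
WAREHOUSE_TB = 250
MB_PER_PAGE = 0.25
PAGES_PER_CONTEXT_WINDOW = 533


def warehouse_pages(size_tb: float, mb_per_page: float = MB_PER_PAGE) -> int:
    """Convert a storage size in TB into an equivalent number of PDF pages."""
    size_gb = size_tb * 1024
    pages_per_gb = 1024 / mb_per_page  # 4,096 pages per GB at 0.25 MB/page
    return int(size_gb * pages_per_gb)


pages = warehouse_pages(WAREHOUSE_TB)
windows = pages / PAGES_PER_CONTEXT_WINDOW
print(f"{pages:,} pages")                 # 1,048,576,000 pages
print(f"{windows:,.0f} context windows")  # 1,967,310 context windows
```

Changing `MB_PER_PAGE` shows how sensitive the result is to the page-size assumption; text-only PDFs are often much smaller than 0.25 MB per page, which would make the gap even larger.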

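In other words, the warehouse would fill roughly two million context windows, and context rot sets in long before that: as the input grows, the model’s recall degrades and the curve heads toward 0.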
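Since the whole warehouse cannot fit, something has to decide what goes into the window. Below is a minimal sketch of one common approach, greedy selection of the highest-scoring chunks under a token budget. It assumes token counts and relevance scores already exist; the `Chunk` type, its fields, and the scores are hypothetical, and this is not how GPT‑5 or any particular memory product works:

```python
from dataclasses import dataclass

# Assumption: the 400,000-token window quoted above; the usable input budget may be smaller.
CONTEXT_BUDGET_TOKENS = 400_000


@dataclass
class Chunk:
    chunk_id: str     # hypothetical identifier for a stored piece of data
    tokens: int       # token count, assumed to be precomputed by some tokenizer
    relevance: float  # relevance score, assumed to come from some retriever


def pack_context(chunks: list[Chunk], budget: int = CONTEXT_BUDGET_TOKENS) -> list[Chunk]:
    """Greedily select the most relevant chunks whose combined size fits the budget."""
    selected: list[Chunk] = []
    used = 0
    for chunk in sorted(chunks, key=lambda c: c.relevance, reverse=True):
        if used + chunk.tokens <= budget:  # skip chunks that would overflow the window
            selected.append(chunk)
            used += chunk.tokens
    return selected


# Usage with made-up numbers: "b" is skipped because it would push the total past 400,000.
candidates = [Chunk("a", 300_000, 0.9), Chunk("b", 200_000, 0.8), Chunk("c", 90_000, 0.5)]
print([c.chunk_id for c in pack_context(candidates)])  # ['a', 'c']
```

Greedy selection is not optimal in general (choosing chunks under a size limit is a knapsack problem), but it is cheap and keeps the most relevant material in the window first.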

Open Model 120B vs. GPT‑5

OpenAI has also released its open-weight model, gpt-oss-120b, which it says comes close to o4-mini on core reasoning benchmarks. If GPT‑5 is not much better than that, why pay when you can use the open model? I’m curious to see how well it handles structured outputs. If it handles them well, OpenAI is cannibalizing its own bottom line.
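One way to compare structured-output quality is to send the same prompts to both models and check every reply against the fields you expect. Below is a minimal sketch using only Python’s standard library. It assumes a hypothetical order schema (`customer_id`, `order_total`, `items`) and does not call any model API; you would feed it the raw text each model returns:

```python
import json

# Hypothetical schema for illustration: field name -> accepted Python type(s).
# JSON numbers without a decimal point parse as int, so order_total accepts both.
EXPECTED_FIELDS = {"customer_id": str, "order_total": (int, float), "items": list}


def check_structured_output(raw: str, expected: dict = EXPECTED_FIELDS) -> list[str]:
    """Return the problems found in a model's JSON reply; an empty list means it passed."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value is not a JSON object"]
    problems = []
    for field, expected_type in expected.items():
        if field not in data:
            problems.append(f"missing field: {field}")
        elif not isinstance(data[field], expected_type):
            problems.append(f"wrong type for {field}: {type(data[field]).__name__}")
    return problems


# Usage with made-up model replies:
print(check_structured_output('{"customer_id": "c1", "order_total": 19.5, "items": []}'))  # []
print(check_structured_output('{"customer_id": "c1"}'))  # ['missing field: order_total', 'missing field: items']
```

Counting the replies that return an empty list, per model, gives a simple pass rate for comparison. For nested schemas, a full JSON Schema validator would be the more robust choice.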

Access

A single-digit message cap on the new model and long waiting lines suggest that AI companies are testing the maximum they can charge. This makes sense, mirrors what Cursor is doing, and recalls the time when Android flagship phones sold for 350 USD until someone figured out that people would pay 1,200 USD for the same thing a few years later.

GPT‑5 and the Future of AI Memory

Even with significant benchmark advances, LLMs continue to face substantial challenges in several critical areas where GPT-5's enhancements may not provide meaningful solutions.

AI models frequently lose track of application state during complex workflows, resulting in code suggestions that inadvertently break existing functionality or introduce inconsistencies.

While LLMs excel at generating syntactically valid code, they often fail to grasp the underlying business requirements, domain-specific rules, and critical edge cases that separate correct software from code that merely runs.

These are the main pain points we have seen teams encounter with current AI tooling. GPT-5's advances show promise, but whether they meaningfully address these core development challenges remains an open question.