Cognee Raises $7.5M Seed to Build Memory for AI Agents

Today, I am proud to announce that Cognee has raised a $7.5 million seed round led by Pebblebed, with participation from 42CAP and Vermilion Ventures, and angel investors from Google DeepMind, n8n, and Snowplow.

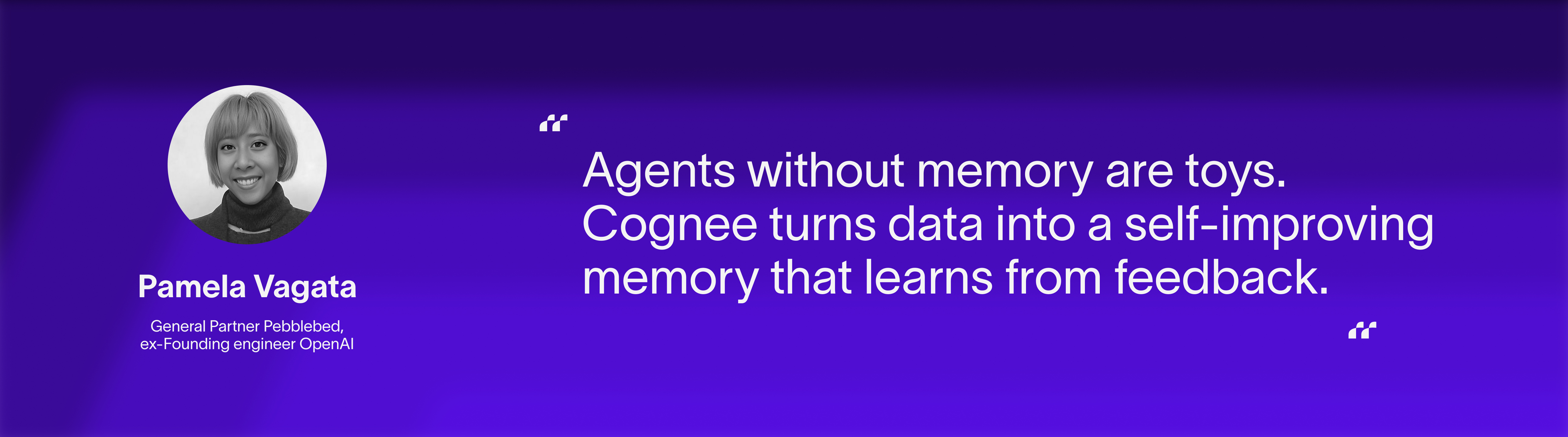

Pebblebed is led by Pamela Vagata, co-founder of OpenAI, and Keith Adams, founder of Facebook AI Research Lab. They've built foundational AI infrastructure before, and they see what we see: agents need real memory to be real products.

The Foundations of AI Memory

Before starting Cognee, I spent over a decade in big data engineering. Then I went back to study cognitive science and clinical psychology. I wanted to understand how memory actually works: how humans organize experience into knowledge, and how we retrieve the right context at the right time. Around that time I started experimenting with vector search, and the idea took shape almost immediately.

My team and I started Cognee in Berlin in 2024 with a simple question: why do AI agents forget everything between sessions? Teams were duct-taping together RAG pipelines, vector stores, rules engines, and logs, and they still ended up with hallucinations and shallow outputs.

We decided to start from first principles, drawing on knowledge engineering, cognitive science, and the work of researchers at UC Berkeley and Brown. Cognee is what came out of that: a memory engine that takes agents from zero to working memory. It bootstraps durable knowledge from raw data and lets that knowledge update itself dynamically over time.

From Trending Repo to Production

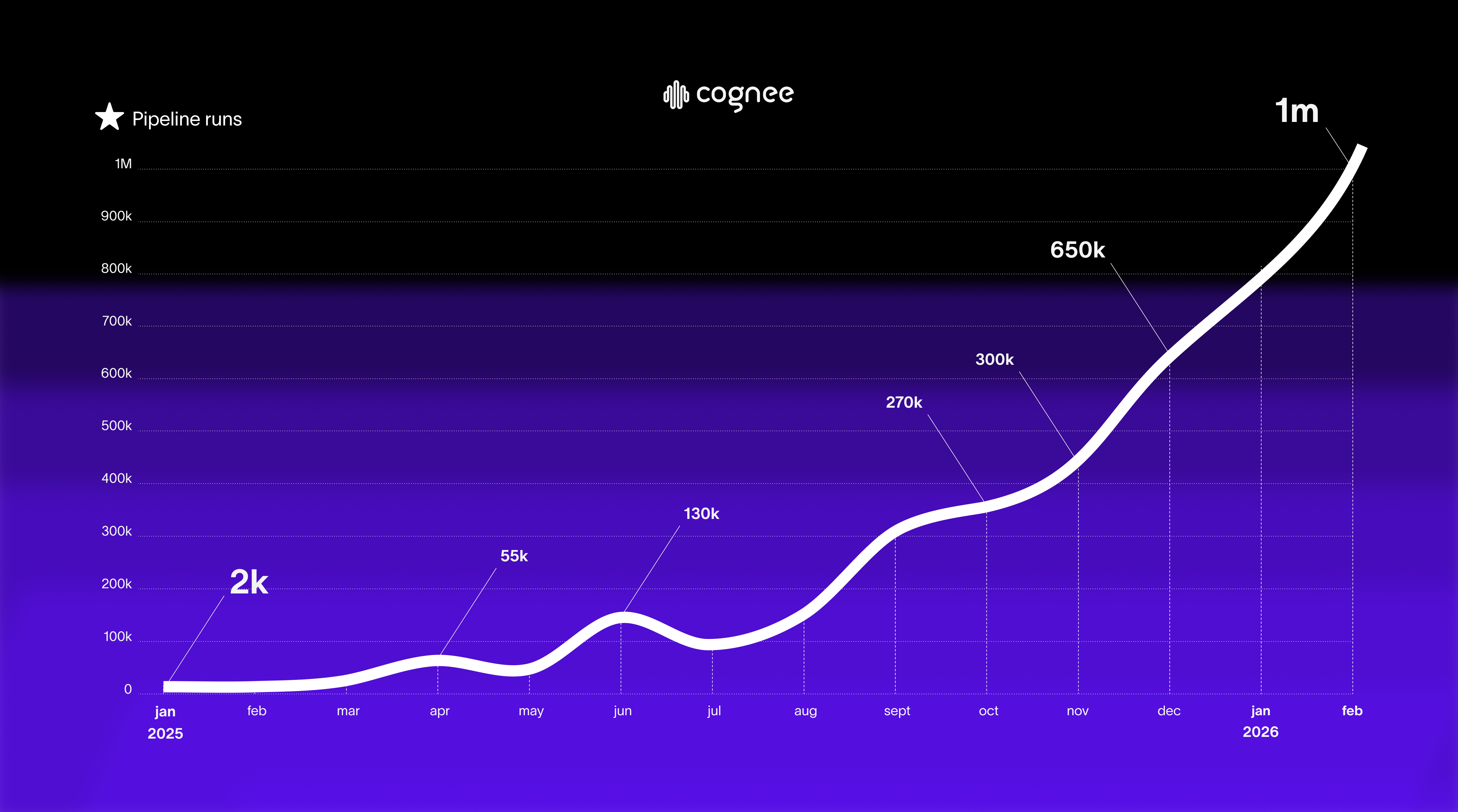

In 2025, Cognee went from an open-source experiment to production infrastructure. Our pipeline volume grew from roughly 2,000 runs to over one million. That's 500x in a single year. Today, Cognee runs in production at more than 70 companies.

As of 2026, Bayer uses Cognee to power scientific research workflows. The University of Wyoming built an evidence graph with page-level provenance from scattered policy documents. Dilbloom and dltHub integrated Cognee into their stacks to bring structured memory to their users. Our open-source project has over 12,000 GitHub stars and 80+ contributors, and last August we graduated from the GitHub Secure Open Source Program.

Agents Need Memory

Building AI agents alongside these teams taught us three things:

- Stateless agents hallucinate and forget. Without memory, every conversation starts from scratch. Teams spend more time patching context failures than building features.

- Good memory needs structure. Retrieval alone is not enough. Agents need temporal awareness, entity relationships, feedback loops, and the ability to self-tune.

- The agentic era demands a new primitive. Agents must store, recall, and reason over experience. Documents are inputs. Memory is what agents actually build on.

AI Memory is a category, not a feature. The investors backing this round, people who built OpenAI and Facebook AI Research, are on board with our vision.

What Cognee Does

Say you're building an agent that needs to learn from every interaction. It should connect facts, track how knowledge evolves, and get more accurate over time. You shouldn't have to hand-wire every relationship.

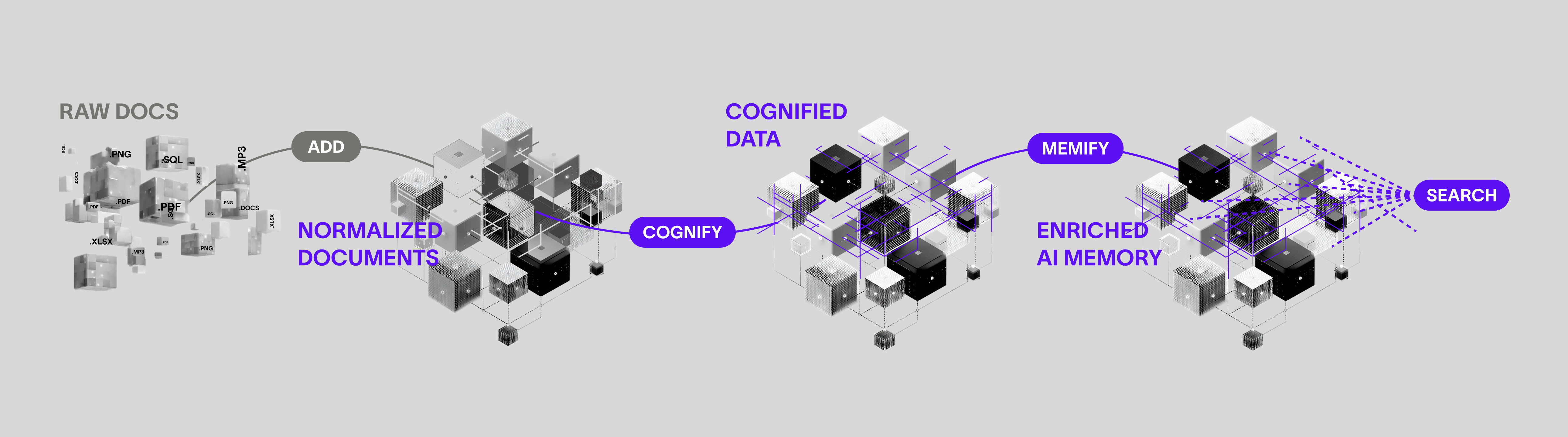

Cognee turns scattered data into a self-improving memory graph. Our ECL pipeline (Extract, Cognify, Load) ingests data from 38+ sources, structures it into a knowledge graph with embeddings and relationships, and makes it searchable. The memify layer then refines this graph through feedback loops: rated responses feed back into edge weights, so the memory gets sharper with use.
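To make the Extract, Cognify, Load flow and the feedback step more concrete, here is a minimal, self-contained Python sketch. It is a toy under stated assumptions, not Cognee's API or implementation: `extract`, `cognify`, `MemoryGraph`, and `feedback` are hypothetical names, entity extraction is a naive capitalized-word heuristic standing in for an LLM, and the multiplicative weight update is just one assumed way a rating could feed back into edge weights.

```python
import re
from collections import defaultdict
from itertools import combinations

# Naive entity pattern: capitalized tokens (hypothetical stand-in for an LLM extractor).
ENTITY = re.compile(r"\b[A-Z][a-zA-Z0-9]+\b")


def extract(raw_docs: list[str]) -> list[str]:
    """Extract: split raw text into sentences (stand-in for real source connectors)."""
    sentences: list[str] = []
    for doc in raw_docs:
        sentences.extend(s.strip() for s in re.split(r"[.!?]", doc) if s.strip())
    return sentences


def cognify(sentences: list[str]) -> dict[tuple[str, str], float]:
    """Cognify: link every pair of entities that co-occur in a sentence."""
    edges: dict[tuple[str, str], float] = defaultdict(float)
    for sentence in sentences:
        entities = sorted(set(ENTITY.findall(sentence)))
        for a, b in combinations(entities, 2):
            edges[(a, b)] += 1.0  # each co-occurrence adds one unit of weight
    return dict(edges)


class MemoryGraph:
    """Load target: an undirected weighted graph that feedback can re-weight."""

    def __init__(self, learning_rate: float = 0.5, min_weight: float = 0.01):
        self.weights: dict[frozenset[str], float] = {}
        self.learning_rate = learning_rate
        self.min_weight = min_weight

    def load(self, edges: dict[tuple[str, str], float]) -> None:
        """Load: merge extracted edges into the graph, summing duplicate weights."""
        for (a, b), w in edges.items():
            key = frozenset((a, b))
            self.weights[key] = self.weights.get(key, 0.0) + w

    def search(self, entity: str, k: int = 3) -> list[tuple[str, float]]:
        """Return up to k neighbors of `entity`, strongest edges first."""
        hits = []
        for key, w in self.weights.items():
            if entity in key:
                (other,) = key - {entity}
                hits.append((other, w))
        hits.sort(key=lambda pair: (-pair[1], pair[0]))
        return hits[:k]

    def feedback(self, entity: str, neighbor: str, rating: float) -> None:
        """Hypothetical memify-style update: a rating in [-1, 1] scales one edge."""
        key = frozenset((entity, neighbor))
        if key not in self.weights:
            return
        rating = max(-1.0, min(1.0, rating))
        updated = self.weights[key] * (1.0 + self.learning_rate * rating)
        self.weights[key] = max(self.min_weight, updated)


if __name__ == "__main__":
    docs = [
        "Bayer uses Cognee for research. Cognee stores memory in Neo4j.",
        "Cognee runs with Neo4j.",
    ]
    graph = MemoryGraph()
    graph.load(cognify(extract(docs)))
    print(graph.search("Cognee"))  # [('Neo4j', 2.0), ('Bayer', 1.0)]
    graph.feedback("Cognee", "Bayer", rating=1.0)   # a good answer used this edge
    graph.feedback("Cognee", "Neo4j", rating=-1.0)  # a poor answer used this edge
    print(graph.search("Cognee"))  # [('Bayer', 1.5), ('Neo4j', 1.0)]
```

A positive rating strengthens the edge behind a good answer and a negative one weakens it, so the ranking returned by `search` shifts with use. Clamping at `min_weight` keeps a single bad rating from deleting an edge outright; that choice is also an assumption of this sketch.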

Cognee unifies three storage layers (relational, vector, and graph) into a single engine. It plugs into the tools teams already use: Claude Agent SDK, OpenAI Agents SDK, LangGraph, Google ADK, n8n, Amazon Neptune, Neo4j, and more.
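One way to picture a single engine over three storage layers is one write that fans out to a relational table, a vector index, and a graph, all keyed by the same id, and one query that moves across them. The Python sketch below assumes in-memory dicts in place of real databases and hand-supplied embeddings in place of an embedding model. `UnifiedMemory` and its methods are hypothetical and do not reflect how Cognee's adapters for Neo4j, Amazon Neptune, or any other backend actually work.

```python
import math
from dataclasses import dataclass, field


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class UnifiedMemory:
    """Hypothetical single engine over three layers that share node ids."""

    rows: dict[str, dict] = field(default_factory=dict)            # relational: id -> attributes
    vectors: dict[str, list[float]] = field(default_factory=dict)  # vector: id -> embedding
    edges: dict[str, set[str]] = field(default_factory=dict)       # graph: id -> neighbor ids

    def add(self, node_id: str, attributes: dict, embedding: list[float], links=()) -> None:
        # One write fans out to all three layers under the same id.
        self.rows[node_id] = dict(attributes)
        self.vectors[node_id] = list(embedding)
        self.edges.setdefault(node_id, set())
        for other in links:
            # Store links in both directions so the graph is undirected.
            self.edges[node_id].add(other)
            self.edges.setdefault(other, set()).add(node_id)

    def query(self, embedding: list[float], k: int = 1) -> list[dict]:
        # Vector layer: rank stored nodes by similarity to the query embedding.
        ranked = sorted(
            self.vectors,
            key=lambda node_id: cosine(embedding, self.vectors[node_id]),
            reverse=True,
        )[:k]
        # Relational layer supplies attributes; graph layer supplies connected nodes.
        return [
            {
                "id": node_id,
                "attributes": self.rows[node_id],
                "neighbors": sorted(self.edges.get(node_id, set())),
            }
            for node_id in ranked
        ]


if __name__ == "__main__":
    memory = UnifiedMemory()
    memory.add("doc1", {"source": "policy.pdf", "page": 3}, [1.0, 0.0], links=["doc2"])
    memory.add("doc2", {"source": "policy.pdf", "page": 4}, [0.0, 1.0])
    print(memory.query([0.9, 0.1]))
    # [{'id': 'doc1', 'attributes': {'source': 'policy.pdf', 'page': 3}, 'neighbors': ['doc2']}]
```

Keying all three layers by the same id is what lets a query start with similarity search and end with structured attributes and graph context. A production engine would presumably also need transactions so the layers cannot drift apart, which this sketch ignores.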

What's Next

With this funding, we're doubling down on four things:

- Cloud platform. Our goal is to make AI Memory accessible at scale, so any team can add structured memory to their agents without managing infrastructure.

- Rust engine for edge devices. We want to bring memory to local and on-device agents, where latency and privacy matter most.

- Cognitive memory research. We want to take cutting-edge research in cognitive science and turn it into production-ready tools.

- Open-source acceleration. We'll be adding multi-database support, user database isolation, new memory approaches, and 30+ new data source connectors, shipping in Q1 and Q2.

Cognee is not building another enterprise platform. We're building the memory layer that makes agents intelligent, and we're keeping it open source at the core.

Let's Build What Agents Remember

Agents without memory are toys. Let's give them something to remember.
